Participate

This is the tenth year of eRisk and the lab plans to organize three tasks:

Task 1: Conversational Depression Detection

- First Window Release: Monday, February 16, 2026

- Full Details: Access all details here

This task extends last year's pilot by detecting depression through conversational agents while improving access and reproducibility. Participants will interact with LLM personas fine-tuned with diverse user histories and released on Hugging Face. Each model will be released on a different day, and participants will have limited days before giving their predictions.

The challenge is to determine whether each persona exhibits signs of depression and, within a limited conversational window, identify active depressive symptoms and the overall depression level. The LLM personas will reflect different severity levels guided by the BDI-II questionnaire, allowing systems to be evaluated across a spectrum of simulated depression.

Teams will download the released persona models, conduct their interactions, and submit predictions a few days later. Evaluation will focus on two key aspects: (i) accurate identification of depressive symptoms present in the persona (if any) and (ii) the overall depression level of the persona, following BDI-II standards.

A limited number of runs will be accepted, with both fully automated and manual-in-the-loop variants permitted, encouraging exploration of conversational strategies while maintaining comparability across submissions.

The proceedings of the lab will be published in the online CEUR-WS Proceedings and on the conference website.

To have access to the collection, all participants have to fill, sign, and send a user agreement form (follow the instructions provided here). Once you have submitted the signed copyright form, you can proceed to register for the lab at CLEF 2026 Labs Registration site.

Important DatesTask 2: Contextualized Early Detection of Depression

Check all the details here: Click Here

This is the second edition of the contextualized early detection task, first introduced in eRisk 2025.

This task focuses on detecting early signs of depression by analyzing full conversational contexts. Unlike previous tasks that focused on isolated user posts, this challenge considers the broader dynamics of interactions by incorporating writings from all individuals involved in the conversation. Participants must process user interactions sequentially, analyze natural dialogues, and detect signs of depression within these rich contexts. Texts will be processed chronologically to simulate real-world conditions, making the task applicable to monitoring user interactions in blogs, social networks, or other types of online media.

The test collection for this task follows the format described in Losada & Crestani, 2016 and is derived from the same data sources as previous eRisk tasks. The dataset includes:

- 1. Writing history from the specific user: Posts from specific target social media users for depression estimation.

-

2. Full conversational contexts:

- The discussion title and comment.

- Comments from all users participating in the conversation, in chronological order.

- Messages exchanged between the specific target user to classify and others in the discussion.

There are two categories of users: individuals suffering depression and control users. For each user, the collection contains a sequence of writings from that specific user along with the rest of the users that participated in the conversation (in chronological order). This approach allows systems to monitor ongoing interactions and make timely decisions based on the evolution of the conversation.

The task is organized into two different stages:

-

Training Stage. Participants will be provided with the contextualized dataset from eRisk 2025, which includes full conversational contexts. This collection serves as training data to enable reproducible development and validation.

- The training data includes both isolated writings from users and their full conversation contexts.

- For each user, the dataset indicates whether they have explicitly mentioned a depression diagnosis.

- Training data may also include users from prior early depression detection tasks, allowing teams to train their systems effectively.

-

Test Stage. During the test phase, participants will connect to our server that provides user writings iteratively, including full conversational contexts (e.g., the discussion title and other users' comments).

Participants have to:

- Analyze the data in real time and send their predictions after processing each writing.

- Use the provided full conversational contexts for each user interaction, simulating real-world scenarios.

Evaluation: The evaluation will consider not only the correctness of the system's output (i.e., whether or not the user is depressed) but also the delay taken to emit its decision. To meet this aim, we will consider the ERDE metric proposed in Losada & Crestani, 2016 and other alternative evaluation measures. A full description of the evaluation metrics can be found in 2021's eRisk overview.

The proceedings of the lab will be published in the online CEUR-WS Proceedings and on the conference website.

To have access to the collection, all participants must fill, sign, and send a user agreement form (follow the instructions provided here). Once you have submitted the signed copyright form, you can proceed to register for the lab at CLEF 2026 Labs Registration site.

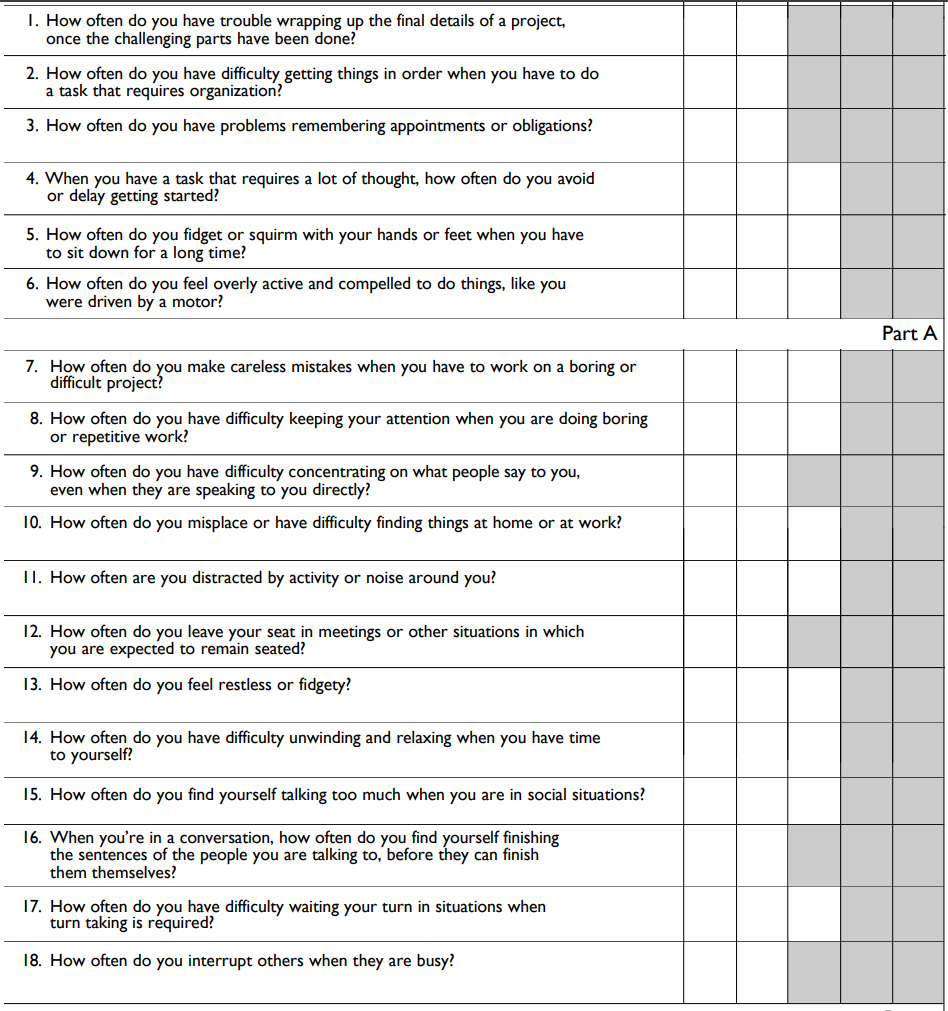

Important DatesTask 3: ADHD Symptom Sentence Ranking

This new task targets sentence-level retrieval for the 18 symptoms defined in the Adult ADHD Self-Report Scale (ASRS-v1.1). Participants must rank candidate sentences by their relevance to each symptom. A sentence is considered relevant when it conveys information about the user's state with respect to the target ADHD symptom (irrespective of polarity or stance), encouraging models to capture clinically meaningful evidence rather than surface keywords.

You can view the ADHD questionnaire here or download the official PDF from this link.

We will release a sentence-tagged dataset derived from publicly available social media writings, collected to contain ADHD-related expressions. As this is the first edition of the ADHD ranking task, no annotated training data will be provided. The release will consist solely of the test inputs, following the formatting conventions of recent eRisk ranking tasks.

Participants will submit 18 rankings (one per ADHD symptom) ordering candidate sentences by decreasing likelihood of relevance. Relevance assessments will be produced via top-k pooling and expert annotation, and systems will be evaluated using standard IR metrics such as MAP, nDCG (e.g., @100), and P@10.

The task is organized into two different stages:

-

Submission stage. After the release of the datasets, the

participants will have time to produce and upload to our

FTP server

their TREC-formatted runs. Each participant may upload up to 5

files corresponding to 5 systems to the

FTP.

The required submission TREC format is as follows:symptom_number Q0 sentence-id position_in_ranking score system_nameAn example of the format of your runs should be as follows:

1 Q0 sentence-id-121 0001 10 myGroupNameMyMethodName 1 Q0 sentence-id-234 0002 9.5 myGroupNameMyMethodName 1 Q0 sentence-id-345 0003 9 myGroupNameMyMethodName ... 18 Q0 sentence-id-456 0998 1.25 myGroupNameMyMethodName 18 Q0 sentence-id-242 0999 1 myGroupNameMyMethodName 18 Q0 sentence-id-347 1000 0.9 myGroupNameMyMethodNameParticipants should submit up to 1000 results sorted by estimated relevance for each of the 18 symptoms of the ADHD questionnaire (ASRS-v1.1). Each line contains: symptom_number, Q0, sentence-id, position_in_ranking, score, system_name.

- Evaluation stage. Once the submission stage is closed, the submitted runs will be used for obtaining the relevance judgments using classical pooling strategies with human assessors. With those judgments, systems will be evaluated.

By extending symptom-oriented retrieval beyond depression to ADHD, this task advances interpretable, symptom-aware retrieval and supports cross-condition generalisation at sentence granularity.

The proceedings of the lab will be published in the online CEUR-WS Proceedings and on the conference website.

To have access to the collection, all participants must fill, sign, and send a user agreement form (follow the instructions provided here). Once you have submitted the signed copyright form, you can proceed to register for the lab at CLEF 2026 Labs Registration site.

Important Dates